When AI evolves quarterly, but your organization plans annually

Organizations should let departments choose the right balance between humans and AI, and focus on outcomes, not rigid processes, to keep pace with rapid technological change. ...

by Faisal Hoque, Paul Scade Published February 18, 2026 in Artificial Intelligence • 11 min read

Organizations face a fundamental challenge when implementing artificial intelligence (AI) tools. On the one hand, the safest and most efficient way to manage major technological change is to build capabilities methodically, working step by step to create the culture and the technical capacity required. At the same time, AI has such deep, transformative potential that business leaders rightly worry their companies may be sidelined by “AI-first” enterprises if they are too cautious in their approach. Balancing the need to get AI adoption right with the need to move quickly will be one of the defining business challenges of the next 10 years.

This tension is not unique to AI. China’s approach to the modernization of its navy shows how these two tracks can co-exist. Despite its eagerness to match the power of US carrier battle groups, China has followed a meticulously patient maturity curve in developing its naval warfare capacity. From the purchase of foreign aircraft carrier hulls for study in the 1990s to the start of work on a nuclear-powered supercarrier of its own design last year, China has moved step by careful step over nearly three decades to acquire the cultural, operational, and technical skills needed to conduct carrier warfare effectively. But in other areas, the Chinese military has raced past its competitors to acquire entirely new, world-leading capabilities. In 2012, China deployed the world’s first operational anti-ship ballistic missiles, capable of threatening US carriers from thousands of miles away. Rather than following a sequential path that passed through every intermediate generation of technology, China instead leapfrogged ahead to its target end state, creating a weapon with the potential to change the balance of power in the Pacific.

This strategically sophisticated dual-track approach – methodical progression where complex dependencies exist and strategic leapfrogging directly to advanced capabilities where opportunities emerge – offers a valuable model for businesses engaged in AI transformation planning. As is the case with the construction and use of supercarriers, it is simply impossible to develop and operate certain AI capabilities without the right infrastructure, governance frameworks, and organizational readiness. At the same time, other AI applications can be deployed rapidly and without sequential development, either because existing capabilities can be creatively recombined or because there are no legacy constraints acting as barriers to implementation.

Taking advantage of both approaches in parallel requires diagnostic precision. This article presents an approach for determining when to leapfrog ahead with AI and when to follow a linear maturity model. Drawing on our experience working with government agencies and private enterprises, we have developed a five-stage AI maturity model that serves as a diagnostic tool rather than a prescriptive roadmap. By mapping constraints at a granular level, leaders can identify where steady progress along the maturity curve is essential and where leapfrogging is not just possible but optimal. The result is an approach that moves businesses past the false choice between slow and steady evolution and rapid revolution, matching transformation strategies to organizational realities while ensuring both immediate competitive advantage and long-term structural strength.

Research conducted on 500 publicly traded companies showed that matching technology projects to organizational maturity levels was an important predictor of overall financial success. In the years since, our experience of using maturity models to guide transformation at large businesses and in major government agencies has confirmed this insight repeatedly. Indeed, over the past decade, maturity models have become part of the mainstream discourse around tech transformation in business.

In many ways, AI implementation is no different from the implementation of any other type of technology: attempting to run before you can walk will inevitably lead to failed initiatives and wasted money. So, the default approach for decision-making around AI should be firmly rooted in an organization’s technological maturity.

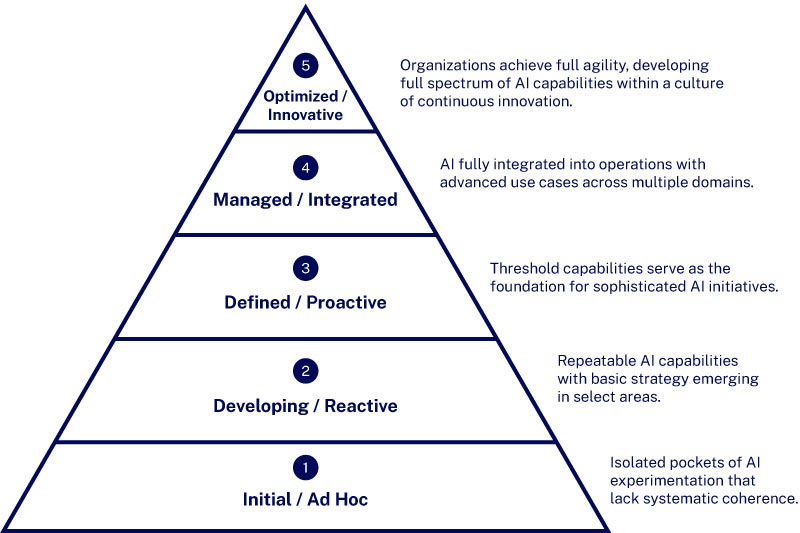

As we outline in a forthcoming book – Reimagining Government by Faisal Hoque, Erik Nelson, Tom Davenport, et al. (Post Hill Press) – our recent work in the defense industry led to the development of a five-stage maturity model that is now being used to guide AI implementation in government agencies. This model can be applied directly to AI innovation programs in business enterprises.

The model tracks the evolution of an organization’s AI maturity across five stages.

Stage 1: Initial/Ad Hoc. Isolated pockets of AI experimentation that lack systematic coherence. No formal organization-wide AI strategy. Technical infrastructure is fragmented. Successful initiatives focus on predictive/analytic AI and workflow automation in narrowly defined use cases.

Stage 2: Developing/Reactive. Repeatable AI capabilities with basic strategy emerging in select areas – typically simple applications like chatbots or basic predictive analytics. Implementations address immediate needs rather than strategic objectives. Organizations recognize the importance of data quality. Some coordination between AI initiatives emerges.

Stage 3: Defined/Proactive. Organizations achieve “threshold capabilities” that serve as the foundation for sophisticated or large-scale AI initiatives. Comprehensive AI strategy that is aligned with broader strategic goals. Integration between key process areas begins along with the standardization of processes for project implementation, including ethical guidelines and risk management frameworks. Mature data management capabilities. Ability to implement complex generative AI capabilities.

Stage 4: Managed/Integrated. Disciplined management with quantitative performance measurements. AI is fully integrated into operations with advanced use cases across multiple domains. Cross-functional AI teams emerge with robust governance frameworks. Established processes for continuous model improvement, enabling simple agentic AI systems.

Stage 5: Optimized/Innovative. Organizations achieve full agility, developing predictive and prescriptive AI capabilities while widely adopting generative AI solutions within a culture of continuous innovation. Seamless human–AI workflows. Sophisticated governance capabilities. Rapid prototyping and deployment. Data infrastructure supporting real-time adaptive systems.

The maturity model provides the foundational reference point for understanding an organization’s capabilities and for mapping its AI adoption journey. The normal trajectory will involve the sequential acquisition of technical capabilities in predictive/analytic AI, generative AI, and then agentic AI. In parallel, the organization will move from isolated pockets of experimentation to a coherent, organization-wide strategy for managing and implementing new AI capabilities. This technical and strategic evolution will be grounded in increasingly sophisticated infrastructure and governance capabilities, and a growing cultural appreciation and acceptance of AI in day-to-day workflows. Moving across these stages requires overcoming limitations that would hamper the successful implementation of initiatives at later levels.
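As a rough illustration of how the stages can serve as a reference point, the sketch below encodes them as an ordered enumeration and counts how many intermediate stages a proposed initiative would jump over. The mapping from capability type to earliest stage is an interpretation of the stage descriptions above, and the names `Stage`, `EARLIEST_STAGE`, `stages_skipped`, and `is_leapfrog` are hypothetical; none of this is part of the model itself.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The five maturity stages, ordered so they can be compared."""
    INITIAL = 1      # Initial/Ad Hoc
    DEVELOPING = 2   # Developing/Reactive
    DEFINED = 3      # Defined/Proactive
    MANAGED = 4      # Managed/Integrated
    OPTIMIZED = 5    # Optimized/Innovative


# Assumption: the earliest stage whose description mentions each capability.
# This is an illustrative reading of the stage summaries, not an official table.
EARLIEST_STAGE = {
    "predictive/analytic": Stage.INITIAL,
    "generative": Stage.DEFINED,
    "agentic": Stage.MANAGED,
}


def stages_skipped(current: Stage, capability: str) -> int:
    """Count the intermediate stages an initiative would jump over."""
    required = EARLIEST_STAGE[capability]
    return max(0, required - current - 1)


def is_leapfrog(current: Stage, capability: str) -> bool:
    """True when at least one intermediate stage would be skipped."""
    return stages_skipped(current, capability) > 0
```

Under these assumptions, a Stage 1 organization proposing a generative AI initiative would skip one stage, so `is_leapfrog(Stage.INITIAL, "generative")` is true, while the same proposal from a Stage 2 organization is simply the next step on the curve.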

In addition to mapping out the typical trajectory for AI implementation, the maturity model can also be used as a diagnostic tool to identify where an organization can leapfrog ahead to new capabilities without passing through all intermediary points (e.g., implementing a generative AI solution without first acquiring a foundation in predictive/analytic AI). It is important to recognize that leapfrogging cannot occur at the whole-organization level.

Startups, of course, are unburdened by legacy constraints and so can build from scratch around AI capabilities. But organizations with more than a few years of history are inevitably burdened with constraints – infrastructure, culture, systems, staff skillsets – that make it impossible to skip past whole stages on the maturity curve without potentially disastrous outcomes. Successful leapfrogging instead targets specific opportunities, deploying advanced AI solutions in comparatively localized, but impactful, contexts. For instance, a company with no high-level experience of analytic/predictive AI might seek to implement a generative AI knowledge management tool in one of its units.

In this context, the maturity model becomes a tool for identifying leapfrogging opportunities and for mapping constraints. The diagnostic process works backward from a survey of possible desired outcomes.

This granular approach reveals why leapfrogging is possible in some domains but not others. China pursued a maturity-driven path to carrier warfare capabilities because its target end state required building sets of tightly interwoven capabilities that could not be obtained independently (e.g., carrier construction, design, and maintenance all overlap and contribute to each other, and in each case, China needed to advance through at least three generations of technology, from small diesel-powered ships with a few dozen planes to nuclear-powered vessels with nearly 100 aircraft). In this case, there was no viable direct path from an initial lack of carrier knowledge to constructing and operating some of the most complex machines ever built. By contrast, the path dependencies required to move from China’s existing missile capabilities to novel anti-ship ballistic missiles were achievable, if not simple. This was a paradigm-shifting advance built on existing technological foundations with a comparatively small number of technical barriers to be overcome.

Insurance startup Lemonade jumped straight to an advanced AI maturity level when it launched in 2016 with an AI claims bot that operated with no human involvement. In doing so, the company skipped both limited pilots and the more typical stage of AI-powered assistance for human claims workers. Lemonade now insures more than 2.6 million clients in the US and EU. Thanks to its leap ahead to fully automated AI claims agents, Lemonade had just 1,260 employees as of May 2025, which works out to more than 2,000 customers per employee. This is almost four times the rate at established insurance firms such as Progressive and Allstate.
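The customers-per-employee figure follows directly from the two numbers quoted above. The short calculation below reproduces it; the implied rate for established insurers is only a rough back-calculation from the "almost four times" claim, not a reported statistic, and the variable names are illustrative.

```python
customers = 2_600_000  # "more than 2.6 million clients"
employees = 1_260      # headcount as of May 2025

# Customers served per employee, using the figures quoted in the text.
customers_per_employee = customers / employees
print(round(customers_per_employee))  # 2063

# Assumption: dividing by four gives a rough floor for the rate at established
# insurers, since the text says "almost" (slightly less than) four times.
implied_incumbent_floor = customers_per_employee / 4
print(round(implied_incumbent_floor))  # 516
```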

Strategic leapfrogging becomes viable when specific conditions align. Using a maturity model as a diagnostic tool helps identify these opportunities by revealing where constraints are minimal or manageable, and where they are not. Here are five key indicators that leapfrogging may be viable:

Successful leapfrogging rarely depends on a single factor. Instead, multiple conditions must be assessed together when determining if leapfrogging is viable: strong mandates might overcome moderate technical constraints, or focused scope might compensate for limited cultural readiness. So, the diagnostic process must examine not just individual factors but also their interactions.

Conversely, this diagnostic tool also reveals contexts in which forcing the adoption of AI-first approaches will lead to failure, wasted resources, and erosion of organizational trust.

When these constraints combine – technical entanglement with regulatory oversight, or workforce resistance with mission-critical operations, for instance – the case for patient, incremental progression becomes overwhelming. Leaders must resist pressure to accelerate in these contexts. Premature leapfrogging attempts that fail spectacularly will undermine institutional confidence in AI initiatives. By contrast, methodical foundation-building will ultimately enable more ambitious transformation.

The path to successful AI adoption does not come from choosing between patience and speed, but from pursuing both simultaneously. To thrive in the AI era, organizations must reject the false choice between AI-first revolution and incremental evolution and instead commit to the diagnostic precision that will allow them to choose the right approach in any given context.

Using maturity models as diagnostic tools will allow organizations to map the constraints holding back individual initiatives while also pursuing the methodical foundation-building that is essential to delivering organization-wide change. This dual-track approach transforms AI adoption from a high-stakes gamble into a portfolio of calculated moves. The winners will be those who move most intelligently, matching their transformation planning to organizational realities with the same strategic sophistication that drives military decision-making.

Executive Fellow at IMD and founder of SHADOKA and NextChapter

Faisal Hoque is a transformation and innovation leader with over 30 years of experience driving sustainable innovation, growth, and transformation for global organizations. For almost a decade, he has partnered with CACI – a $9 billion Fortune 500 leader in national security – to drive enterprise transformation and innovation across mission-critical government operations. A serial entrepreneur, Hoque is the founder of SHADOKA and NextChapter and is a three-time winner of Deloitte’s Technology Fast 50 and Fast 500™ awards. He has been named among Ziff Davis’ Top 100 Most Influential People in Technology, and serves as a judge for MIT’s IDEAS Social Innovation Program. Hoque is a best-selling and award-winning author of eleven books. His latest include the USA Today and LA Times bestsellers Reimagining Government: Achieving the Promise of AI (2026) and Transcend: Unlocking Humanity in the Age of AI (2025). His research and thought leadership have been recognized globally.

Honorary Fellow at the University of Liverpool and a partner at SHADOKA

Paul Scade is an historian of ideas and an innovation and transformation consultant. His academic work focuses on leadership, psychology, and philosophy, and his research has been published by world-leading presses, including Oxford University Press and Cambridge University Press. As a consultant, Scade works with C-suite executives to help them refine and communicate their ideas, advising on strategy, systems design, and storytelling. He is an Honorary Fellow at the University of Liverpool and a partner at SHADOKA.
