AI bubble or real shift? How leaders can prepare for what's next

by Michael R. Wade and Konstantinos Trantopoulos • Published September 19, 2025 in Technology • 9 min read

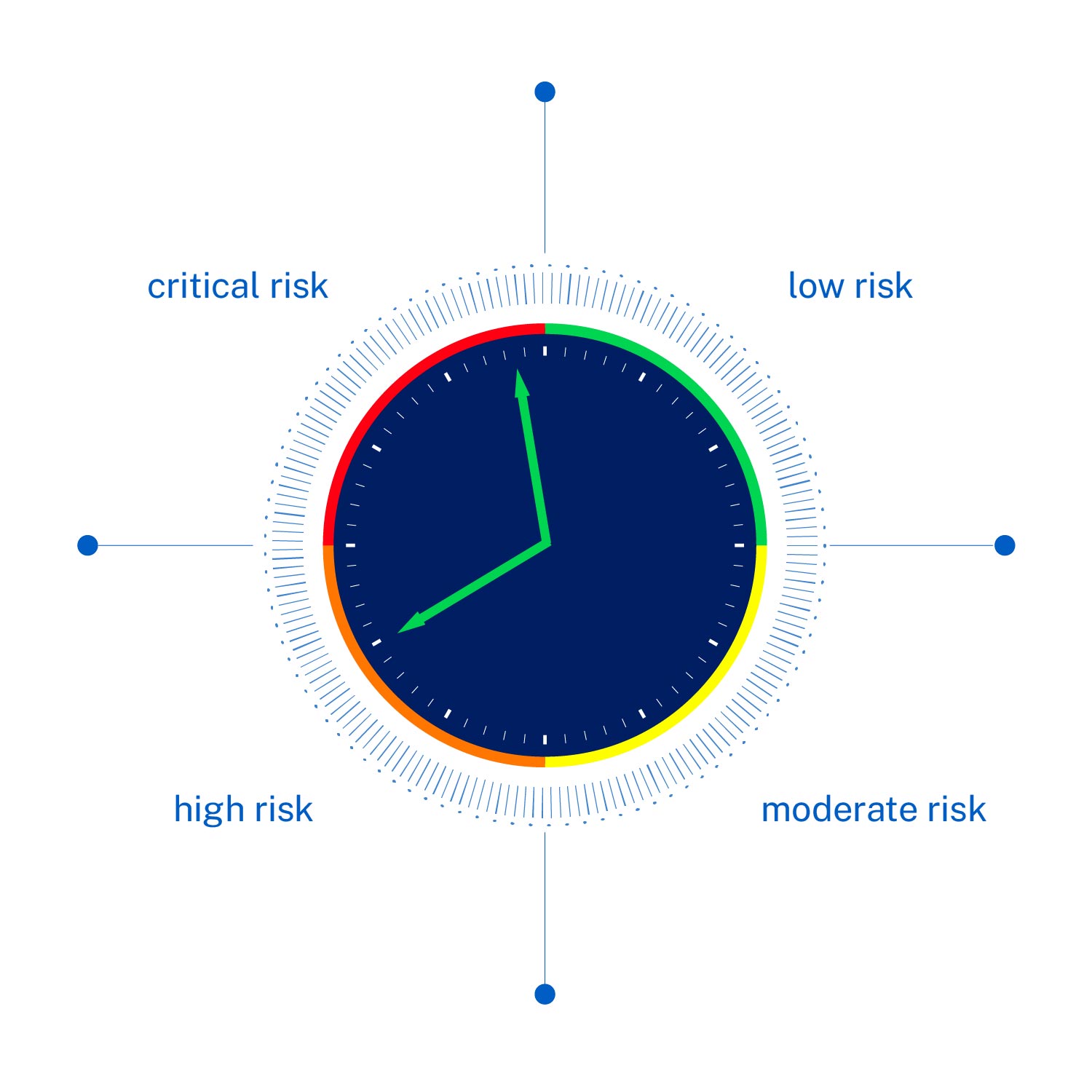

The IMD AI Safety Clock has jumped four minutes forward to 23:40, just 20 minutes to midnight. The largest single adjustment since its launch a year ago, the decision was driven by a new phase in AI development and deployment. Over the course of 12 months, the AI Safety Clock has advanced nine minutes closer to midnight, highlighting the accelerating pace of risk escalation.

In September 2024, the IMD AI Safety Clock was launched by the TONOMUS Global Center for Digital & AI Transformation. The clock is a metaphorical risk gauge that estimates how close humanity is to a tipping point where Uncontrolled Artificial General Intelligence (UAGI) could pose serious threats. Inspired by the Doomsday Clock, which measures existential risks from nuclear conflict, the AI Safety Clock tracks our distance from midnight, the moment when UAGI could become uncontrollable or dangerously misaligned with human interests.

Rather than predicting exact dates or events, the clock is designed to signal trends. It combines quantitative data from thousands of news feeds, research reports, and monitoring systems with expert qualitative judgment. The assessment focuses on three key dimensions: sophistication, or the reasoning and problem-solving abilities of AI; autonomy, or the independence with which AI systems operate without human oversight; and execution, or the ability of AI to act on and influence the physical world.

When the clock was launched in September 2024, it was set at 23:31, 29 minutes before midnight. This baseline reflected rapid advances in large language models, the first experiments with agentic AI, and limited execution capacity through robotics. It marked a starting point for tracking AI risk rather than a forecast of imminent catastrophe.

By December 2024, the clock had advanced to 23:34, 26 minutes before midnight. “AI was moving faster than expected,” explains Amit Joshi, Professor of AI, Analytics, and Marketing Strategy at IMD. “Breakthroughs in agentic reasoning, the rise of Chinese models, lack of unified regulations, and deepening ties between AI companies and defense actors pushed the risk profile higher.” This was the first clear sign that risk was not static. Across the three dimensions, sophistication and autonomy were strengthening, while execution risk, especially in military contexts, began to emerge as a serious concern.

Just two months later, on 25 February 2025, the clock was updated again to 23:36, 24 minutes to midnight. This move reflected a confluence of five factors: the surge of open-source AI, with models such as DeepSeek demonstrating how quickly and cheaply powerful systems could be built; the rise of autonomous AI agents from OpenAI, Anthropic, and Perplexity; massive investments from the United States and China; deregulatory shifts in U.S. policy championed by the Trump administration; and a corporate pivot away from safety toward rapid commercialization. “AI was no longer just a technological race; it had become a geopolitical arms race,” observed IMD President David Bach. “When speed and dominance are prioritized, safety becomes the casualty.” At this stage, autonomy had clearly become a defining concern, with AI agents taking on tasks once thought to be securely in human hands. Execution risks also grew, as deregulation and militarization began to accelerate deployment.

Since mid-2025, AI weaponization has intensified, with autonomous systems increasingly integrated into defense and cyberwarfare. In the United States, the Trump administration’s AI Action Plan has reinforced acceleration and global competition over caution. Large language models have reached new levels of sophistication, with OpenAI releasing GPT-5 in August 2025, Google DeepMind unveiling Genie 3 as a “world model” capable of building interactive simulations, and researchers introducing a new Hierarchical Reasoning Model to advance structured problem-solving. Apple, Meta, and others have also rolled out optimized or specialized models, fueling an unprecedented pace of progress.

On the autonomy front, the leaders of 2025’s agentic AI race are setting new benchmarks for scale and enterprise adoption. AWS Strands Agents provides developers with an open-source SDK to rapidly build and deploy autonomous systems, while Databricks Agent Bricks helps enterprises refine agents through synthetic data, evaluations, and optimization tools. Nvidia’s NeMo Agent Toolkit delivers large-scale monitoring and performance tuning, and Google Cloud’s Conversational Agents Console introduces Gemini-powered, emotionally aware assistants for voice and chat at enterprise scale. Salesforce Agentforce 3 has emerged as the gold standard for trust and control with its Command Center and 100+ prebuilt actions, while ServiceNow’s AI Agent Orchestrator acts as a control tower for coordinating fleets of agents across IT, HR, and customer service.

Together, these innovations signal that agentic AI has crossed from experimentation to mainstream deployment. “Sooner rather than later, all the GenAI that we use will have some agentic properties,” says José Parra Moyano, Professor of Digital Strategy at IMD. “And while trust and control are being put at the center of agentic design, giving work to the agents implies delegating.” A clear example came in August 2025, when Wells Fargo partnered with Google Cloud to roll out AI agents company-wide, proof that enterprise autonomy is no longer aspirational, but operational.

At the same time, decentralized approaches are emerging, such as Youmio’s blockchain-based AI agent network, enabling autonomous agents with wallets, memory, and on-chain verifiable actions to operate transparently across digital environments. However, making agentic AI sovereign actors on a blockchain magnifies both their power and their potential for harm. The risks include autonomy without oversight, cascading vulnerabilities, financialized exploits, and governance blind spots. Finally, agentic AI is no longer merely a source of guidance; it is being weaponized, with models now capable of actively executing sophisticated cyberattacks rather than simply advising on them. These developments underline the concern that agents are not only proliferating but becoming embedded in critical processes in ways that pose significant risks.

Execution risk has also leapt forward. China unveiled the world’s first humanoid robot store in August 2025 and showcased its ambition to dominate AI and robotics at the Beijing World Robot Conference.

Most alarmingly, AI weaponization is accelerating. Ukraine revealed a massive arms buildup strategy, seeking billions in new investment to build a deterrent against Russia that is more reliable than Western guarantees, drawing on AI innovation that includes domestically produced drones. Simultaneously, reporting revealed that China’s private sector, including tech companies and universities, is rapidly advancing military AI capabilities for the People’s Liberation Army. China’s military parade signaled an arms race fueled by scientific and technological advances, including AI-powered uncrewed vehicles, distinct AI drones, and underwater combat systems. Experts warned that the display of these systems alongside stealthy next-generation weapons not only signaled technological maturity but also sent a strategic message of deterrence and alternative military orders. At the same time, China’s advances in gallium nitride semiconductor technology are establishing a quiet but decisive edge in weapon systems beyond the reach of U.S. export restrictions.

In the United States, the AI Action Plan pushes for integration of AI across critical infrastructure, calling for secure-by-design deployments and an AI Information Sharing and Analysis Center. Experts warn, however, that embedding AI into critical infrastructure raises serious safety concerns, from adversarial vulnerabilities to opaque governance.

All these developments make it clear that AI systems are no longer limited to text or data analysis but are increasingly embedded in physical, military, and critical systems. “This is no longer about models predicting text,” says Misiek Piskorski, Professor of Digital Strategy, Analytics and Innovation and Dean of Executive Education at IMD. “It’s about AI systems that can reason, act independently, and execute decisions in both digital and physical domains. That raises the stakes dramatically and means that leaders must be AI-literate to understand how they can responsibly deploy AI models.”

The table below shows how risk has shifted across sophistication, autonomy, and execution over the first year of the clock:

| Assessment | Date | Clock time (minutes to midnight) | Sophistication | Autonomy | Execution |
| --- | --- | --- | --- | --- | --- |
| Initial | Sep 2024 | 23:31 (29 min) | Rapid LLM advances | Early signs of agents | Limited physical-world impact |
| First update | Dec 2024 | 23:34 (26 min) | Open-source gaining momentum, competition in AI hardware intensifying | First agentic AI breakthroughs | Defense ties and emerging military use |
| Second update | Feb 2025 | 23:36 (24 min) | Open-source surge and emergence of cheap, powerful models | Proliferation of autonomous agents | Deregulation, AI weaponization, corporate shift from safety |
| Third update | Sep 2025 | 23:40 (20 min) | GPT-5, Genie 3, HRM advanced reasoning | Enterprise-scale deployment of agents, rise of decentralized networks | AI weaponization intensifying, arms race, humanoid robots, US AI Action Plan and integration with critical infrastructure |
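
For readers who want to check the arithmetic behind these readings, the short Python sketch below recomputes the minutes to midnight and the size of each adjustment from the four clock times reported in this article, written in 24-hour form. It is an illustration only: the names `READINGS` and `minutes_to_midnight` are hypothetical, and the script says nothing about how the clock itself is set, which rests on the combination of data and expert judgment described earlier.

```python
from datetime import datetime

# Clock readings as reported in the article (24-hour time).
READINGS = [
    ("Sep 2024", "23:31"),
    ("Dec 2024", "23:34"),
    ("Feb 2025", "23:36"),
    ("Sep 2025", "23:40"),
]


def minutes_to_midnight(clock_time: str) -> int:
    """Return whole minutes remaining until 24:00 for an HH:MM reading."""
    t = datetime.strptime(clock_time, "%H:%M")
    return 24 * 60 - (t.hour * 60 + t.minute)


remaining = [minutes_to_midnight(t) for _, t in READINGS]

# Size of each adjustment: how many minutes closer to midnight
# the clock moved between consecutive readings.
steps = [earlier - later for earlier, later in zip(remaining, remaining[1:])]

# Total movement over the first year of the clock.
total_advance = remaining[0] - remaining[-1]

for (label, t), left in zip(READINGS, remaining):
    print(f"{label}: {t} -> {left} min to midnight")
print("adjustments:", steps, "| total advance:", total_advance)
```

The computed adjustments are three, two, and four minutes, which matches the article's description of the September 2025 move as the largest single adjustment and of a nine-minute advance over 12 months.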

The story of the IMD AI Safety Clock’s first year is not only about growing sophistication and autonomy, but also about the rise of execution risks: the entry of AI into weapons, robotics, and autonomous platforms capable of acting directly in the world. The clock underscores a sobering reality: innovation is advancing at breakneck speed, while governance, accountability, and cooperation are struggling to keep pace. Without stronger safeguards and collective action, the hands of the AI Safety Clock are likely to keep moving forward. Midnight is not inevitable, but the trajectory we are on makes it harder each day to slow down the clock.

All views expressed herein are those of the authors and have been specifically developed and published in accordance with the principles of academic freedom. As such, such views are not necessarily held or endorsed by TONOMUS or its affiliates.

Professor of Strategy and Digital

Michael R. Wade is Professor of Strategy and Digital at IMD and Director of the Global Center for Digital and AI Transformation. He directs a number of open programs, including Leading Digital and AI Transformation, Digital Transformation for Boards, Leading Digital Execution, Digital Transformation Sprint, Digital Transformation in Practice, and Business Creativity and Innovation Sprint. He has written 10 books and hundreds of articles, and has hosted popular management podcasts including Mike & Amit Talk Tech. In 2021, he was inducted into the Swiss Digital Shapers Hall of Fame.

Advisor and Research Fellow at IMD

Konstantinos Trantopoulos is an Advisor and Fellow at IMD, working with senior executives, boards, and investors globally on growth, value creation, and profitability. His work focuses on how organizations shape strategy and translate it into measurable value through people, leadership, processes, and emerging technologies including AI. His insights have appeared in Harvard Business Review, MIT Sloan Management Review, California Management Review, MIS Quarterly, Το Βήμα, and Forbes. He is also the co-author of Twin Transformation, available on Amazon.
