AI bubble or real shift? How leaders can prepare for what's next

Finance and digital strategy experts debate whether AI returns will materialize quickly enough to prevent a market correction. ...

by Öykü Işık Published February 28, 2025 in Artificial Intelligence • 4 min read

Artificial intelligence (AI) offers game-changing opportunities for businesses to improve and scale their operations, and companies are rushing to seize them, yet fears over potential security threats have tempered enthusiasm.

Scaling broadens the attack surface, making industrial systems more vulnerable to cyber threats. AI models can be targeted by adversarial attacks, data poisoning, or model inversion techniques that expose sensitive information. The increased connectivity of industrial AI systems creates additional entry points for hackers.
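To make data poisoning concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of any real industrial system: the vibration readings, the labels, and the helper names `train_threshold` and `classify` are all hypothetical. A toy fault detector learns a threshold from labeled sensor readings. When an attacker slips a few mislabeled readings into the training data, the learned threshold shifts and a genuinely faulty reading passes as normal.

```python
from statistics import mean

def train_threshold(readings):
    """Learn a cut-off halfway between the mean 'normal' and mean 'faulty' reading."""
    normal = [value for value, label in readings if label == "normal"]
    faulty = [value for value, label in readings if label == "faulty"]
    return (mean(normal) + mean(faulty)) / 2

def classify(value, threshold):
    """Flag a reading as faulty when it exceeds the learned threshold."""
    return "faulty" if value > threshold else "normal"

# Hypothetical, hand-made training data: (vibration level, label).
clean = [
    (1.0, "normal"), (1.2, "normal"), (0.9, "normal"),
    (3.0, "faulty"), (3.2, "faulty"), (2.8, "faulty"),
]

# Assumed attack: high readings injected with a deliberately wrong label.
poisoned = clean + [(4.0, "normal"), (4.2, "normal"), (4.1, "normal")]

clean_threshold = train_threshold(clean)
poisoned_threshold = train_threshold(poisoned)

suspect_reading = 2.5
verdict_clean = classify(suspect_reading, clean_threshold)
verdict_poisoned = classify(suspect_reading, poisoned_threshold)
```

With the clean data the suspect reading is flagged; with the poisoned data it is not. Real attacks and real models are far more sophisticated, but the mechanism is the same: whoever can influence the training data can influence the model's decisions.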

Yet, by proactively integrating security measures into AI development and deployment, organizations can minimize risks while maximizing the potential of this tool.

AI is most successful when organizations have specific, well-defined use cases, know what they are measuring, and hold proprietary data. Industrial AI, the application of artificial intelligence in business settings such as manufacturing, energy, and construction, clearly showcases this potential. Relying on traditional machine learning (ML) rather than the less-well-tested generative AI, industrial AI has been used for tasks including predictive maintenance, quality checks, and energy management for more than 15 years. ML relies on pattern recognition, learning from past data to predict current needs, and its efficiency gains are proven.
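As a rough illustration of what "learning from the past to predict current needs" can look like in predictive maintenance, the Python sketch below flags a sensor reading that deviates sharply from its own history. It is a deliberately simple statistical check rather than a trained ML model, and the function name `is_anomalous`, the temperature values, and the three-standard-deviation limit are assumptions chosen for the example.

```python
from statistics import mean, stdev

def is_anomalous(history, new_reading, z_limit=3.0):
    """Return True when new_reading lies more than z_limit standard
    deviations away from the mean of past readings."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past readings were identical: any change is a deviation.
        return new_reading != mu
    return abs(new_reading - mu) / sigma > z_limit

# Hypothetical bearing temperatures (degrees Celsius) from normal operation.
history = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2]

routine = is_anomalous(history, 70.4)   # small drift, within normal variation
overheat = is_anomalous(history, 75.0)  # large jump, worth an inspection
```

The point of the sketch is the one made above: the use case is specific, the measurement is known, and the data is the organization's own, which is what makes the result easy to act on.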

As Cedrik Neike, managing board member and CEO of Digital Industries, Siemens, writes in a new report, A New Pace of Change: Industrial AI x Sustainability: “In fact, industry has steadily been developing AI since the 1970s, making it reliable, secure, trustworthy, and suitable for industrial use. ‘Industrial AI’ now meets the requirements of the most demanding environments, enabling us to communicate with software, equipment, or machines in natural language, and helping us to design processes or even entire plants.”

Still, AI is changing the cyber threat landscape. AI introduces unique attack surfaces, requiring proactive and AI-specific security measures. Implementing zero-trust AI, robust data security, and adversarial defenses is critical. It is also crucial to remember that AI security is not just an IT issue; it is a business priority affecting compliance, reputation, and trust.

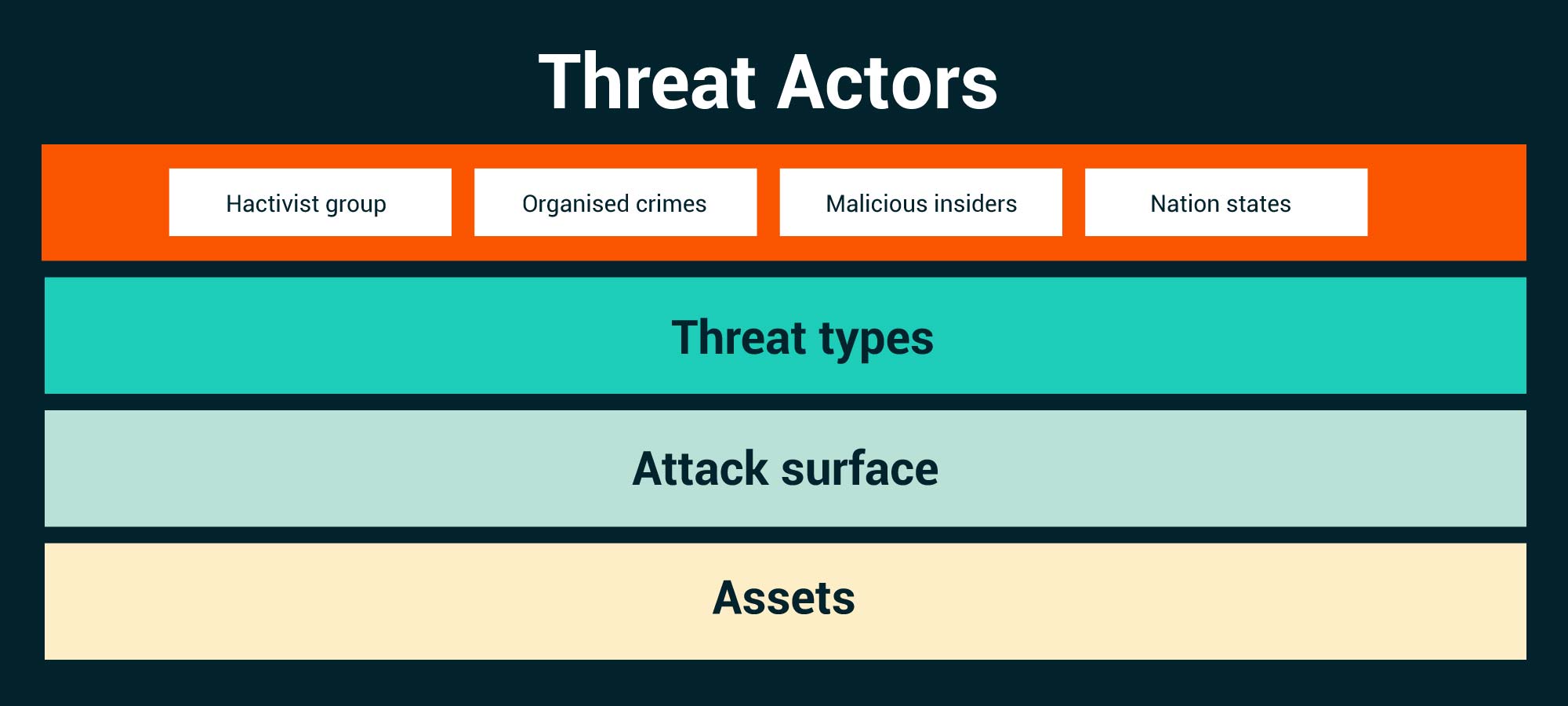

Building reliable, accurate, and secure AI requires attention to all four elements of the traditional threat landscape: threat actors, threat types, attack surfaces, and core assets. AI introduces the prospect of unique attack surfaces within these four threat entry points, requiring proactive and AI-focused security measures.

To mitigate risks when building industrial AI processes, you have to follow a secure-by-design approach; you cannot just fix issues as they come up. A secure-by-design approach integrates security into the design and building of products, systems, and applications, creating AI that is secure by default. A big part of this is the AI vendor: if the vendor builds without addressing vulnerabilities, you will bring those vulnerabilities into your organization. For example, there was widespread enthusiasm for the lower cost of DeepSeek, the fast and less expensive Chinese AI challenger to Silicon Valley stalwarts like OpenAI, Google, and Meta. Yet independent security evaluations of DeepSeek have found a series of weaknesses and exposed its susceptibility to cyber threats including prompt injection attacks, jailbreaking, and data poisoning. Cheaper options may not represent savings in the long term.

Mitigating cybersecurity risks when using AI requires a multi-layered approach that includes robust security measures, governance frameworks, and continuous monitoring. Here is a checklist for the five key strategies:

Professor of Digital Strategy and Cybersecurity at IMD

Öykü Işık is Professor of Digital Strategy and Cybersecurity at IMD, where she leads the Cybersecurity Risk and Strategy program and co-directs the Generative AI for Business Sprint. She is an expert on digital resilience and the ways in which disruptive technologies challenge our society and organizations. Named on the Thinkers50 Radar 2022 list of up-and-coming global thought leaders, she helps businesses to tackle cybersecurity, data privacy, and digital ethics challenges, and enables CEOs and other executives to understand these issues.
