As artificial intelligence and, more recently, generative AI become increasingly common in corporate decision making, companies need to be aware of the broader risks arising from their common weaknesses.

AI-powered systems and services have rapidly transformed digital capabilities across every economic sector. With this capacity comes a significant governance challenge. Unlike earlier systems, AI can answer questions or recommend courses of action based not on rules programmed by humans, but on those it has derived from its own analysis of large pools of data.

For AI to produce valuable insights, however, the right kinds of information are required to train it and form the assumptions embedded in the system. There are many examples of outright error or bias. One MIT study found that facial-recognition software worked much more effectively for white males than for dark-skinned females, probably because of weaknesses in the training data. Amazon abandoned early efforts to use AI in recruitment assessment because of the tool’s tendency to privilege male applicants. Further complicating the matter, many AI tools produce only answers to questions; the reasoning behind them remains obscured in a high-tech black box.

Setting clear boundaries for AI

Any company that wants to deploy such a powerful capability must create effective ethical-governance standards to avoid relying on flawed advice that may lead to error or to a failure to meet compliance requirements. This need is clear. Best practice in creating ethical corporate guardrails for AI is less so. The experience of Mastercard holds important lessons for companies as they wrestle with this issue.

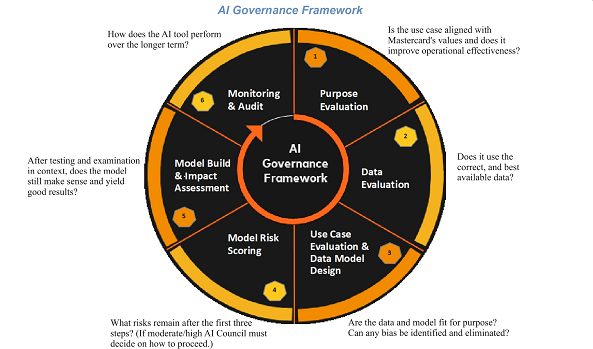

As the need for AI governance arises from a new digital capability, the temptation is to look for a straightforward technological fix. This is the wrong approach, for two reasons. First, tools ready for easy adoption may not exist. When Mastercard began its process of creating AI governance arrangements, executives scanned what was available and found very little on which they could draw. Since then, AI governance framework toolkits and templates have proliferated online, but there is no guarantee that these will apply to any given company’s circumstances without substantial revisions.

Second, and more important, focusing initially on which technological changes to make puts the cart before the horse. Instead, to create and apply the right governance guardrails, companies must begin by looking at their own core purpose and underlying values, and consider arrangements that will make AI serve these.

The foundations of AI governance at Mastercard

Mastercard defines itself as “a technology company in the global payments industry that connects consumers, financial institutions, merchants, governments, digital partners, businesses and other organizations worldwide, enabling them to use electronic forms of payment instead of cash and checks.” It aims to facilitate transactions by acting as a conduit for sending information between merchants and the banks of buyers.

The company has a longstanding policy of adopting the most up-to-date technology to help it accomplish its mission. As early as 1973, it moved from the telephone to a computer network for payment authorizations. Mastercard sees AI as the most recent innovation that can benefit its stakeholders; accordingly, it has positioned itself to become an AI powerhouse. This includes using the technology to enhance its products, to increase the efficiency and effectiveness of internal operations and, in particular, to support its fraud prevention, anti-money laundering, and cyber-security processes.

Any such integration of AI, however, has had to occur within the strict framework of the company’s compliance regimes and values. An issue of particular relevance here is Mastercard’s focus on data protection. Indeed, because the company acts, in essence, as a data pipeline between merchant and bank, its policy is to limit the information it receives and sends to the minimum necessary to facilitate any given transaction. It does retain some data, but governs this using its Data Responsibility Imperative: a series of principles that go beyond government data-privacy mandates.

Underlying this policy, and everything else at Mastercard, is the company’s core value of dedication to decency, what it calls its Decency Quotient. Boiled down, this is a commitment that the company and its employees will try to do the right thing.