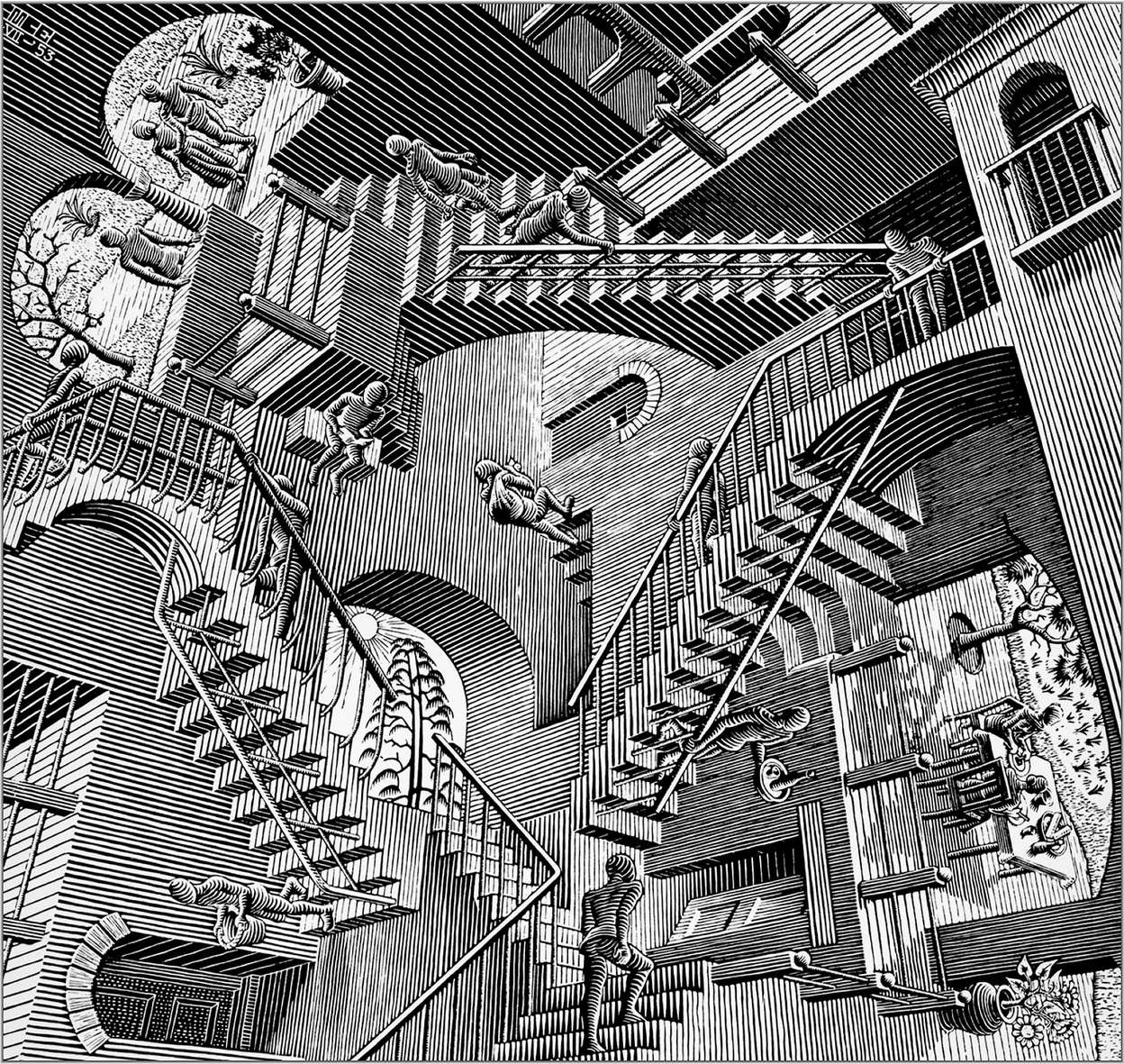

Domestic and international political dynamics, technological acceleration, and the era of poly-crisis and perma-crisis create events with consequences no one can foresee or fully understand. Existing playbooks – be they economic, strategic, organizational, or interpersonal – used by executives to guide their actions provide insufficient guidance. Similarly, the existing mental models they use to make sense of new realities prove increasingly inadequate. Decision-makers are told to “expect the unexpected”. That may sound good, but it is profoundly unhelpful and logically impossible: as soon as we expect something, it is not unexpected anymore. More fundamentally, trying to conceive of frame-breaking events and their consequences is based on prediction, and research shows that humans are not good at that. Our brains are designed to find regularity. We (over)simplify, rely on often inaccurate memory, and trust previous experience. All this leads us to expect and predict stability and continuity.

The more expertise we build up, the more we value our understanding of the world. We trust our intuitive ability to make sense of and understand the world and how it is evolving. At the same time, we have less and less of an incentive to question our existing mental models because doing so challenges our sense of our identity, expertise, and value. In volatile, changing, and complex circumstances, expertise can become a liability that is hard to overcome.

For this reason, I suggest that individuals, teams, and organizations that want to improve their ability to operate in complex and changing environments learn to “unexpect the expected”. To do this, we must become aware of our existing mental models, develop a different way to perceive and interpret uncertainty, and – maybe most importantly – replace an implicit performance orientation with a deliberate and committed learning orientation in our engagement with the world. The first step is to let go of our need for certainty and actively embrace curiosity.

Letting go of familiar mental models is uncomfortable because it is cognitively demanding and emotionally challenging. It often feels like admitting we are wrong. Suspending our expertise to adopt the mindset of a novice learner can be especially difficult for experienced professionals and requires courage. Leading others through this shift demands strong, empathetic leadership.

In rapidly changing environments, success is less a function of past knowledge and more about how quickly and accurately we can learn. In such conditions, clinging to what we already know is more dangerous than exploring and embracing what we have yet to fully understand. That’s why learning through testing and disconfirmation – actively challenging our assumptions – is essential. So, how can we do that?

1. Embrace disconfirmation

One of the most effective ways to challenge entrenched thinking is to deliberately adopt a skeptic’s perspective – a disconfirming stance. This doesn’t mean being negative for its own sake. It means insisting on high standards of evidence before accepting something as true, even things we have learned in the past. In medicine, for example, an approach known as differential diagnosis helps to distinguish among multiple plausible diagnoses and indicates tests that can help determine the true cause of a patient’s symptoms. In any organization that has developed the capacity for disconfirmation, you will regularly hear people say, “Have you tested this?” or “What does the data say?” This reflects the core logic of the scientific method: insisting on quality data and testing rather than defending ideas. This scientific mindset builds intellectual robustness. In business, we too often neglect this discipline, preferring confirmation over challenge. We must work hard to reverse that tendency.

2. Leverage cognitive diversity

Diverse teams (across age, background, culture, training, etc.) bring different mental models, which is a strategic advantage, especially when facing novel challenges. However, inclusion is not enough. Minority perspectives must be actively amplified. If they don’t exist, they can be deliberately introduced through roles such as “devil’s advocates” or debates designed to surface dissent before consensus ossifies. These interventions only work if there is psychological safety. Individuals will not voice dissent if they fear it is socially or professionally risky. In addition, when high-status team members signal a preferred view, alternatives will quickly fade. Real learning requires a climate in which speaking up is not just permitted but enabled, expected, and supported. This can be signaled with “evidence events” to which everyone is invited and expected to bring different and challenging evidence. The more senior members show publicly that they are open to changing their minds, the more such cognitive diversity can take root across the team and organization. This is how diverse thinking can inform collective sensemaking.

3. Explore the power of counterfactuals

Counterfactual reasoning forces us to step outside current assumptions. “What if” questions challenge accepted facts or logic and create space for alternative explanations. For example: “What if our access to a critical resource drops by 30% for the next 18 months?” These provocations may initially seem unrealistic, but the more outlandish they feel, the more they push us to test and revise entrenched models. In practice, we can extend this logic with the red-team/blue-team exercises common in cybersecurity testing, where a red team attacks the existing security arrangements to test assumptions and find weaknesses. Alternatively, in new product development, instead of asking, “What can we learn from the needs and wants of our customers?” we can ask, “What can we learn from those who choose not to buy from us?”

4. Flip causal logic

We often default to linear cause-and-effect reasoning, but new insights can emerge when we reverse that logic. Instead of asking how X causes Y, ask, “What if Y causes X?” For example, instead of assuming overeating causes obesity, ask, “What if obesity leads to overeating?” These inversions don’t need to be correct to be useful because they help expose blind spots, uncover assumptions, and broaden how we interpret complexity. In a similar spirit, Charlie Munger’s inversion approach urges us to consider not just how to achieve an objective but how to avoid its opposite. It might also be helpful to ask questions that turn symptoms into causes (as in Toyota’s “five whys” root cause analysis: “Why did the machine fail? A tripped fuse. Why did the fuse trip?”), but fully flipping causal direction is a more powerful way of challenging automatic thinking patterns and identifying hidden assumptions.
