Human-AI Synergy: Deciding When Humans Stay in the Loop

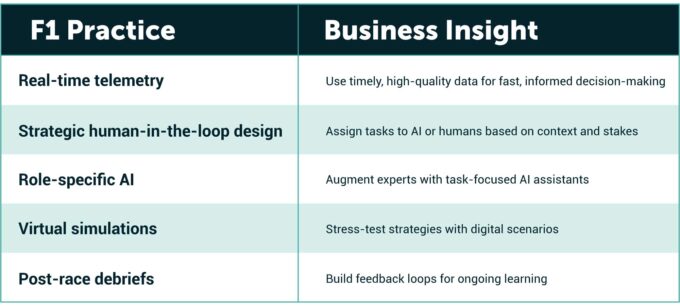

Formula 1 teams rely heavily on advanced telemetry and AI to gain a competitive edge. Modern F1 cars stream over a million data points per second from hundreds of onboard sensors, and cloud computing platforms such as Oracle Cloud process this flood of information to run billions of strategy simulations in real time. These simulations factor in dynamic variables such as weather, track temperature, and tire degradation to help determine optimal race strategies. AI-driven dashboards distill this complexity, surfacing only the most actionable insights to engineers and guiding decisions such as when to pit or how to adjust a driver’s pace to manage thermal and mechanical stress.
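
To make "surfacing only the most actionable insights" concrete, here is a minimal Python sketch of a dashboard filter. It assumes each model output carries a confidence score and an estimated impact in seconds of race time; the `Insight` type, the `surface_actionable` function, the 0.7 confidence cutoff, and the three-item limit are all hypothetical choices for illustration, not any team's actual system.

```python
from dataclasses import dataclass


@dataclass
class Insight:
    message: str
    confidence: float  # model confidence, 0.0 to 1.0
    impact_s: float    # estimated effect on race time, in seconds (hypothetical)


def surface_actionable(insights, min_confidence=0.7, max_items=3):
    """Drop low-confidence insights, then keep the few with the highest expected impact."""
    confident = [i for i in insights if i.confidence >= min_confidence]
    # Expected impact = estimated effect weighted by the model's confidence.
    ranked = sorted(confident, key=lambda i: i.impact_s * i.confidence, reverse=True)
    return ranked[:max_items]


if __name__ == "__main__":
    feed = [
        Insight("Box this lap: undercut window open", 0.85, 4.0),
        Insight("Front-left tire temperature rising", 0.90, 1.5),
        Insight("Minor fuel-flow fluctuation", 0.40, 0.2),
        Insight("Rain expected in 12 laps", 0.75, 6.0),
        Insight("Lift and coast into Turn 1", 0.80, 0.8),
    ]
    for insight in surface_actionable(feed):
        print(insight.message)
```

Ranking by confidence-weighted impact is one plausible policy among many; the point is that filtering happens before anything reaches the engineer.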

Beyond race strategy, AI models enable predictive maintenance by analyzing historical and live telemetry to anticipate component failures before they happen; catching a failing part early can make the difference between a podium finish and a DNF (Did Not Finish) caused by mechanical breakdown. Some teams even use generative AI during races to parse hundreds of pages of FIA (Fédération Internationale de l’Automobile) sporting and technical regulations, extracting relevant clauses in seconds to ensure compliance or identify strategic loopholes. Historically, this work fell to dedicated engineers, who had to comb through intricate regulations and review numerous past incidents under intense time pressure to make rapid, race-defining decisions.

Yet despite this digital horsepower, F1 teams don’t hand full control over to machines. Instead, they divide the work. Continuous monitoring and predictive diagnostics, such as spotting brake imbalance or anticipating tire degradation, are delegated to AI, because these tasks are data-heavy, time-sensitive, and cognitively overwhelming for humans in real time. Decisions about race strategy, pit stop timing, or rule interpretation, by contrast, often involve ambiguity or legal implications. Here, human engineers stay in the loop, applying domain expertise, intuition, and situational judgment to override or refine AI recommendations where context and discretion matter.

Equally important, engineers must receive the right information at the right time, neither overwhelmed with unnecessary detail nor left under-informed. F1 demonstrates that optimal decision systems are hybrid by design: they leverage AI for speed and scale while relying on humans for nuanced interpretation and strategic decision-making.
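
The division of labor described above can be expressed as a simple routing rule. The Python sketch below is a hypothetical encoding of it: the `Task` attributes mirror the criteria named in the text (data-heavy, time-critical, ambiguous, legally sensitive), while the `route` function, its rule ordering, and the default of sending unclear cases to a human are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    DELEGATE_TO_AI = "AI handles it; humans monitor"
    HUMAN_IN_THE_LOOP = "AI recommends; a human decides"


@dataclass
class Task:
    name: str
    data_heavy: bool          # large, continuous data streams
    time_critical: bool       # must be handled within seconds
    ambiguous: bool           # no single correct answer from the data alone
    legal_implications: bool  # e.g. regulation or penalty exposure


def route(task: Task) -> Route:
    """Hypothetical routing rule for deciding who owns a decision."""
    # Ambiguity or legal exposure always keeps a human in the loop.
    if task.ambiguous or task.legal_implications:
        return Route.HUMAN_IN_THE_LOOP
    # Data-heavy, time-critical work that is well defined goes to the AI.
    if task.data_heavy and task.time_critical:
        return Route.DELEGATE_TO_AI
    # Assumption: anything that fits neither pattern defaults to a human.
    return Route.HUMAN_IN_THE_LOOP


if __name__ == "__main__":
    tasks = [
        Task("brake imbalance detection", True, True, False, False),
        Task("tire degradation forecast", True, True, False, False),
        Task("pit stop timing", True, True, True, False),
        Task("regulation interpretation", False, True, True, True),
    ]
    for t in tasks:
        print(f"{t.name}: {route(t).value}")
```

Checking ambiguity and legal exposure first means that a task which is both data-heavy and ambiguous, such as pit stop timing, still ends with a human decision, with the AI's recommendation as input.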

This human-in-the-loop model aligns with research showing that even the most sophisticated AI tools in Formula 1 can underperform or mislead when their sociotechnical context is ignored. Failures often stem not from flaws in the algorithms themselves, but from a misalignment between AI outputs and the way engineers interpret and act on them. Synergy, in other words, depends not only on model performance but on how well the system fits the people who use it.

This collaboration between human intuition and AI precision illustrates a core principle in F1: peak performance arises not from full automation, but from augmenting human decision-making with intelligent systems.
