The simple technology that reads facial expressions may be great for business, but there are ethical concerns as well as question marks about its reliability

My facial muscles were getting a good workout, as I contorted them into different expressions. I thought I was putting on my “happy” face, but my facial expression was interpreted as being fearful. I next tried to hiss like a cat, showing my teeth and scrunching up my nose. This expression was interpreted as being angry. I spent the next five minutes trying to express the six basic emotional states: anger, contempt, happiness, surprise, disgust, and fear.

I was exploring a website, emojify.info, which was launched this month by researchers hoping to raise awareness and encourage dialogue about emotion AI, an emerging technology that is raising alarm amongst ethicists while whetting the appetite of businesses and investors. “We need to be having a much wider public conversation and deliberation about these technologies,” Dr Alexa Hagerty, project lead and researcher at the University of Cambridge, told The Guardian newspaper. “It claims to read our emotions and our inner feelings from our faces.”

It’s already here

While humans might currently have the upper hand on reading emotions, machines are gaining ground using their own strengths. Emotion AI seeks to learn and read emotions by decoding facial expressions, analyzing voice patterns, monitoring eye movements, and measuring neurological immersion levels. The field dates back to at least 1995, with the work of MIT professor Rosalind Picard, and is starting to show promise with real use-cases.

Businesses are starting to explore emotion AI to improve customer and employee experiences, and analysts project that the technology will generate an $87bn market. Its ease of use, given that it can be done at a distance with nothing but a camera, could help businesses anticipate which products and services to offer consumers. One obvious use is in market research to assess how consumers react to advertising.

Market research agency Kantar Millward Brown records footage of viewers’ faces and analyzes their expressions frame by frame to assess their mood. “You can see exactly which part of an advert is working well and the emotional response triggered,” Graham Page, managing director of offer and innovation at Kantar Millward Brown, told the BBC.

Increasingly, emotion AI has moved beyond advertising and is being used in situations such as job hiring, airport security and even education. During the COVID-19 pandemic, some schools in Hong Kong leveraged emotion AI to gauge whether students remained engaged in doing their homework. While they were studying at home, the AI measured students’ facial muscle points using their computer cameras to identify their emotions. Combined with other data points such as how long students took to answer questions and their prior academic history, the AI program generated a report that included a forecast of their grades.

Who’s the boss?

“The paradigm is not human versus machine, it’s really machine augmenting human,” explained Rana el Kaliouby, CEO of Affectiva, in an interview with MIT. But experts are skeptical as to who is really in control.

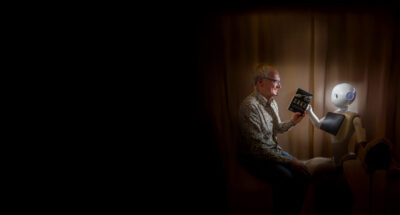

Take the example of Cogito, an artificial intelligence program designed to help customer service agents by listening to the tone, pitch, word frequency, and hundreds of other factors. When it hears a strained tone, the program sends a message to the agent with a pink heart: “Empathy cue: Think about how the customer is feeling. Try to relate.” Machines using emotion AI are no longer just attempting to interpret human emotion; they are also starting to simulate those emotions and, in turn, prodding humans to be more empathetic.

Cogito executives describe their product as a type of coaching software, which TIME journalist Alejandro de la Garza describes as “unsettling.” As de la Garza points out, “If AI can ‘coach’ the way we speak, how much more of our lives may soon be shaped by AI input? And what do we really understand about the way that influence works?”
