Large language models (LLMs) are not magic boxes. They predict the next word based on patterns in their training data, and they work best when we set the scene before asking for help.

To deploy them usefully in the workplace, configure first, then prompt. Choose a privacy setup that fits the task, and be careful with connectors like calendar, drive, and email. Use agents and tools only where they genuinely help you take a specific action. With these habits, AI becomes useful in day-to-day work.

These topics were discussed in the most recent meeting of the IMD Tech Community, a growing group of alumni who gather virtually for webinars to support each other in leading confidently with emerging technologies.

Context framing

LLMs are probabilistic systems. That means the same question can produce slightly different answers each time. They are great at language: explaining ideas, rewriting, summarizing, and drafting. They are less reliable at exact math or hard facts without help. Models come in different types and sizes, and some can work with inputs other than text. A larger context window can help, but too much irrelevant text can also degrade the result. The key is to give the right information at the right time.
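To make the "probabilistic" point concrete, here is a toy sketch in Python. The word list and probabilities are invented for illustration and do not come from any real model; the sketch only shows why drawing repeatedly from the same distribution can give different continuations.

```python
import random

# Hypothetical next-word probabilities after the prompt "Our proposal is".
# These numbers are made up for illustration only.
NEXT_WORD_PROBS = {"ready": 0.5, "attached": 0.3, "late": 0.2}


def sample_next_word(rng: random.Random) -> str:
    """Draw one word at random, weighted by its probability."""
    words = list(NEXT_WORD_PROBS)
    weights = list(NEXT_WORD_PROBS.values())
    return rng.choices(words, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so each run may differ
    print([sample_next_word(rng) for _ in range(5)])
```

Running the script several times will usually print different lists, which mirrors what happens when the same question is put to a model twice.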

Configure before you prompt

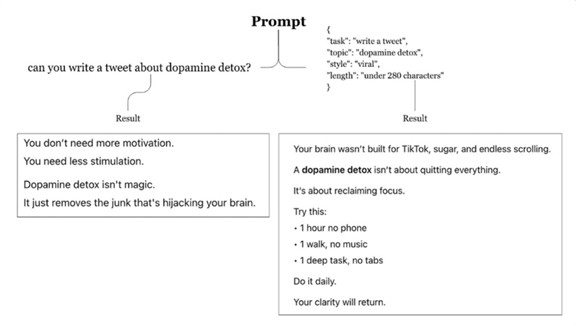

Before you type your main request, establish three critical things: context, role, and expectation.

- Context tells the model what the task is about and who the audience is.

- Role tells the model who to pretend to be (for example, a tutor, pricing analyst, or project manager).

- Expectation tells the model how to answer (format, length, and rules).

When you define these three elements, you give the model a clear framework within which to work, thereby reducing the likelihood of hallucination.
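The three-part frame can be captured in a small reusable template. The sketch below is one possible shape, not a prescribed format; the class name `PromptBrief`, its `render` method, and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PromptBrief:
    context: str      # what the task is about and who the audience is
    role: str         # who the model should act as
    expectation: str  # format, length, and rules for the answer

    def render(self, request: str) -> str:
        """Put the three framing elements ahead of the main request."""
        return (
            f"Context: {self.context}\n"
            f"Role: {self.role}\n"
            f"Expectation: {self.expectation}\n\n"
            f"Request: {request}"
        )


if __name__ == "__main__":
    brief = PromptBrief(
        context="Quarterly pricing update for regional sales managers.",
        role="Act as a pricing analyst.",
        expectation="Five bullet points, plain language, under 150 words.",
    )
    print(brief.render("Summarize the main price changes."))
```

Keeping the frame separate from the request means the same brief can be reused across many prompts.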

Other useful practices include personalizing the assistant and, where it helps, using memory to store preferences:

- If you don’t want your chats used to improve models, turn off “Improve the model for everyone” in ChatGPT data controls or the equivalent in other LLMs.

- Ask for clear formats like bullet points or JSON when structure matters.

- Keep instructions short and direct. Small talk wastes tokens and can confuse the goal.
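When you ask for JSON, it is worth checking the reply before anything downstream relies on it. The sketch below assumes the model's reply arrives as a plain string containing a JSON list; the function name `parse_rows` and the required keys are hypothetical and would be replaced by whatever structure you asked for.

```python
import json

# Hypothetical keys we asked the model to include in every row.
REQUIRED_KEYS = {"owner", "proof", "metric"}


def parse_rows(reply: str) -> list[dict]:
    """Parse a reply that should be a JSON list of objects.

    Raises ValueError if the reply is not valid JSON, is not a list,
    or contains a row that lacks one of the required keys.
    """
    try:
        rows = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Reply is not valid JSON: {exc}") from exc
    if not isinstance(rows, list):
        raise ValueError("Expected a JSON list of rows")
    for i, row in enumerate(rows):
        if not isinstance(row, dict):
            raise ValueError(f"Row {i} is not a JSON object")
        missing = REQUIRED_KEYS - row.keys()
        if missing:
            raise ValueError(f"Row {i} is missing keys: {sorted(missing)}")
    return rows
```

If the check fails, a common next step is to send the error message back to the model and ask it to correct the format.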

Example: Sales proposal first draft (based on real cases – anonymized and modified)

We asked GenAI to write the first version of a sales offer. We told it who the customer was, what they cared about, and our usual winning points. It gave us a clear two-page draft.

- Context: “Write a first draft for a sales offer for our medical devices to [Company X] in the healthcare industry. Focus on their top three goals of patient safety, positive surgery outcomes, and maximizing operational efficiency.”

- Role: “Write like our proposal writer. Use our winning points of clinically proven performance, user-centric design, and rapid response support. Keep it short and clear. Benefits first.”

- Expectation: “Give us a two-page draft and a five-item benefits table (owner, proof, metric).”

- Result: First-draft time fell by 90%, freeing up time for deeper (GenAI-assisted) research on second-round submissions (June–July 2025).

Privacy is an architectural decision

People often ask about safety, but they usually mean privacy – how to keep their data private when using an LLM.

The answer lies in design: the architecture you choose, which connectors you turn on, what the AI can see, and how long data is kept.

Common patterns include:

- Tenant-native tools (e.g., Copilot inside a Microsoft 365 tenant, using Microsoft Graph) – convenient, but each connector (Calendar, OneDrive/SharePoint, email) opens new paths to information.

- Vendor APIs with a gateway/proxy to remove personal data, block risky content, and log what goes out.

- Self-hosted small models that can anonymize or pre-process text before sending it to larger external models.

- Private stack with tools behind managed servers, exposing only minimal capabilities for the most sensitive work.

“Before you link AI to your calendar, files, or email, ask yourself: Do we need this, and what could go wrong?”

Action: Choose your privacy posture per workload.

- Use tenant-native tools for everyday tasks, with least-privilege connectors.

- Use a gateway when calling outside APIs so you can redact sensitive data.

- Use a small local model to anonymize text before it leaves your boundary.

- Keep a private stack for high-sensitivity tasks with minimal external calls.
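The gateway posture can be illustrated with a very small redaction step. This is a minimal sketch, assuming that emails and phone-like numbers are the only personal data of interest; the patterns are deliberately simple, the function name `redact` is hypothetical, and a real gateway would need far broader coverage (names, addresses, IDs) plus proper logging.

```python
import re

# Deliberately simple patterns for illustration; they will miss many cases.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> tuple[str, int]:
    """Replace emails and phone-like numbers with placeholders.

    Returns the redacted text and the number of replacements, so the
    gateway can log how much was removed before the text goes out.
    """
    text, n_email = EMAIL.subn("[EMAIL]", text)
    text, n_phone = PHONE.subn("[PHONE]", text)
    return text, n_email + n_phone


if __name__ == "__main__":
    print(redact("Contact anna@example.com or +41 21 555 01 23 today"))
```

The same function could sit in front of any outbound API call, so that only the redacted text leaves your boundary.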

Beyond chat: agents, tools, and realistic next steps

People want actions, not just words. This is where agentic workflows connect models to tools and permissions.

- Decompose the task into steps that a tool can accomplish.

- Keep permissions tight and auditable.

- Separate decisions (the model) from actions (the tools).

- Add a short review step before something important runs.
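The four points above can be sketched as a small dispatcher: the model only proposes an action, and separate code decides whether it may run. Everything here is hypothetical, including the tool names, the `proposal` dictionary shape, and the in-memory audit log; the tools are stand-ins that only return strings.

```python
from typing import Callable


def draft_email(to: str, body: str) -> str:
    """Stand-in tool: low risk, runs without review."""
    return f"Draft to {to}: {body}"


def post_invoice(invoice_id: str) -> str:
    """Stand-in tool: important action that needs a person's approval."""
    return f"Posted invoice {invoice_id}"


# Tight permissions: only tools on this allowlist can ever run.
TOOLS: dict[str, Callable[..., str]] = {
    "draft_email": draft_email,
    "post_invoice": post_invoice,
}
NEEDS_APPROVAL = {"post_invoice"}
audit_log: list[str] = []  # auditable record of every decision


def run_action(proposal: dict, approved: bool = False) -> str:
    """Run a model-proposed action only if it passes the guardrails."""
    name = proposal["tool"]
    if name not in TOOLS:
        audit_log.append(f"blocked: {name}")
        raise PermissionError(f"Tool not allowed: {name}")
    if name in NEEDS_APPROVAL and not approved:
        audit_log.append(f"pending review: {name}")
        return "pending review"
    result = TOOLS[name](**proposal["args"])
    audit_log.append(f"ran: {name}")
    return result
```

Here the model's output is just data (`{"tool": ..., "args": ...}`); nothing happens until `run_action` checks the allowlist and, for important actions, a person has approved.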

Example: Invoices checked faster (based on real cases, anonymized): A generative AI model read incoming invoices and performed a three-way reconciliation, comparing the purchase order (PO), the goods receipt note (GRN), and the invoice. It completed the accounting form in the ERP and prepared the entry. A person validated the entry and clicked ‘approve’ before anything was posted. Result, compared with the manual process: fewer manual clicks (down around 80%), time saved (5–7 hours/week), and mistakes stayed low (< 2% over four weeks).
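For readers unfamiliar with three-way reconciliation, the comparison itself is simple once the figures have been extracted. The sketch below is a simplified illustration, not the logic used in the case described: the field names, the single-line documents, and the price tolerance are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Line:
    quantity: int
    unit_price: float


def three_way_match(
    po: Line, grn_quantity: int, invoice: Line, price_tolerance: float = 0.01
) -> list[str]:
    """Compare PO, goods received, and invoice for one line item.

    Returns a list of mismatches; an empty list means the three agree.
    """
    issues: list[str] = []
    if grn_quantity != po.quantity:
        issues.append("received quantity differs from PO")
    if invoice.quantity != grn_quantity:
        issues.append("invoiced quantity differs from goods received")
    if abs(invoice.unit_price - po.unit_price) > price_tolerance:
        issues.append("invoice price differs from PO")
    return issues
```

An empty result would let the entry go forward to the human approval step; any mismatch would be shown to the reviewer instead.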

Retrieval that respects structure

When the model needs facts from your files, retrieval quality depends on structure. If tables and PDFs are messy, answers suffer. Organize sources first, keep tables clean, and break long files into chunks that keep meaning.
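One simple way to break a long file into chunks that keep meaning is to never cut inside a paragraph. The sketch below assumes plain text with blank lines between paragraphs; the function name `chunk_paragraphs` and the 800-character default are illustrative choices, and real pipelines often split on headings or sections instead.

```python
def chunk_paragraphs(text: str, max_chars: int = 800) -> list[str]:
    """Group whole paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars becomes its own oversized
    chunk rather than being cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars or not current:
            current = candidate  # paragraph fits, or starts a new chunk
        else:
            chunks.append(current)  # close the chunk at a paragraph boundary
            current = para
    if current:
        chunks.append(current)
    return chunks
```

Because chunk boundaries always fall between paragraphs, each retrieved chunk reads as a complete thought.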

Example: Pricing Q&A bot (based on real cases, anonymized): We cleaned a messy price list and split a long policy PDF into clear sections. After that, the AI could answer “how much” questions more accurately. Result: in tests, correct answers went from 62% to 94% and replies were 45% faster.

“LLMs are great at language. They still need to be set up to work well.”

A simple stepwise framework

- Set your frame – context, role, and expectation.

- Be clear about privacy – pick a posture for this task.

- Keep connectors lean – add only what you truly need and review what each one exposes.

- Separate words from actions – model decides, tools act, permissions stay tight, add a review step.

- Prepare your sources – structure files so retrieval works.

- Measure time-to-useful – track setup time and the rework you avoid.

Playbook

- Make a one-page “prompt brief” everyone can reuse: Context (who/why), Role (“act as…”), Expectation (what format, length, tone). Share the template.

- Treat each connector as a design decision with an owner, scope, retention, and review cycle.

- Pick a privacy posture per workload: tenant-native, gateway with redaction, small local model to anonymize, or a private stack.

- Design agentic work with guardrails: break tasks into steps, keep permissions minimal, log actions, and require a pre-action plan.

- Structure sources before retrieval: consistent tables, logical chunks, extra care with PDFs.

- Educate teams on what LLMs do well (language) and where guardrails matter (actions, exact math).

- Add a small proof box per use case showing baseline to end result, sample size, and time window (e.g., “RFP drafting reduced from 6.5 hours to 3.2 hours across 18 bids, Sep–Oct 2025”).

- Track value by time saved and the number of retries avoided.
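The proof box in the playbook comes down to simple arithmetic. As a sketch, the hypothetical helper `proof_box` below turns a baseline and an end result into a one-line summary, using the illustrative RFP figures from the list above. The total assumes every item in the sample saved the average amount, which is a simplification.

```python
def proof_box(
    baseline_hours: float, result_hours: float, sample_size: int, window: str
) -> str:
    """Summarize a use case as baseline, end result, sample size, and window."""
    saved = baseline_hours - result_hours
    pct = 100 * saved / baseline_hours
    return (
        f"{baseline_hours:g} h -> {result_hours:g} h per item "
        f"({pct:.0f}% less), n={sample_size}, {window}; "
        f"about {saved * sample_size:.1f} h saved in total"
    )


if __name__ == "__main__":
    print(proof_box(6.5, 3.2, 18, "Sep-Oct 2025"))
```

With the illustrative figures, going from 6.5 hours to 3.2 hours is a reduction of about 51% per bid.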

Takeaway

Across the examples above, teams saw meaningful time savings and less rework over the trial period.

You don’t need a moonshot to make AI useful. Treat the model like a skilled writer that needs a clear brief. Configure first, then prompt. Think about privacy as a plan, especially when you add connectors. Move beyond chat only where it adds real value, and give your tools tight permissions with a simple review step.

Do these things, and AI will start saving you time every week.

This article is based on the IMD Tech Community Session: AI Personal Productivity Session 01: Introduction to prompt engineering

Session speakers

John Joyce is a software consulting leader who connects business and data, designs agentic AI workflows, and delivers always-on value across banking, telco, healthcare, and retail.

Omar Nicholas Achment is Group Head of Risk, IMS, and ESG. He brings a consulting background in investment banking technology, along with entrepreneurial and application development experience.

Christophe Meili is an entrepreneur and global account manager for agentic automation solutions. He drives strategic innovation and operational efficiency for enterprise clients worldwide.

Please join us for one of our next live discussions. You can find a full list of upcoming events here.

Stay connected via LinkedIn or send us your ideas at t[email protected]. Let us know if you’d like to join – or co-create the next chapter.